Issue #1: Multiple Versions of Bundled Code

Our custom architecture split every page into independent pagelets (i.e. subsections of a page), resulting in multiple JS entry points per page, with each servlet being served by its own controller on the backend. This let teams deploy faster and more independently, but the trade-off was that different sections of a single page could be running on different backend code versions.

This meant our architecture had to support delivering separate versions of packaged code on the same page, which led to consistency problems (e.g. multiple instances of a singleton being loaded on the same page). Eliminating the pagelet architecture was our non-negotiable first step: it gave us the flexibility and stability we needed to adopt an industry-standard bundling scheme.
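To make the singleton problem concrete, here is a minimal sketch. It assumes that each copy of the packaged code is evaluated in its own module scope; `evaluateBundle`, `Store`, and the version strings are hypothetical names used only for illustration, not part of our actual bundler.

```typescript
// Hypothetical stand-in for a bundled module that exposes a singleton.
// Each call to evaluateBundle() models the browser evaluating one copy
// of the packaged code, which gets its own module scope.
interface Store {
  version: string;
  count: number;
}

interface BundleExports {
  getStore(): Store;
}

function evaluateBundle(version: string): BundleExports {
  let instance: Store | undefined; // module-scoped "singleton"
  return {
    getStore(): Store {
      if (instance === undefined) {
        instance = { version, count: 0 };
      }
      return instance;
    },
  };
}

// Two pagelets on the same page, each served a different bundle version.
const headerBundle = evaluateBundle("v1");
const sidebarBundle = evaluateBundle("v2");

// Only the header's copy of the store is updated.
headerBundle.getStore().count += 1;

// Within one bundle the singleton guarantee holds.
const sameWithinBundle =
  headerBundle.getStore() === headerBundle.getStore();

// Across bundles there are two distinct instances with diverging state.
const sharedAcrossBundles =
  headerBundle.getStore() === sidebarBundle.getStore();
```

In this sketch `sameWithinBundle` is true while `sharedAcrossBundles` is false, so any state written through one pagelet's "singleton" is invisible to the other.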

Issue #2: Manual Code-splitting

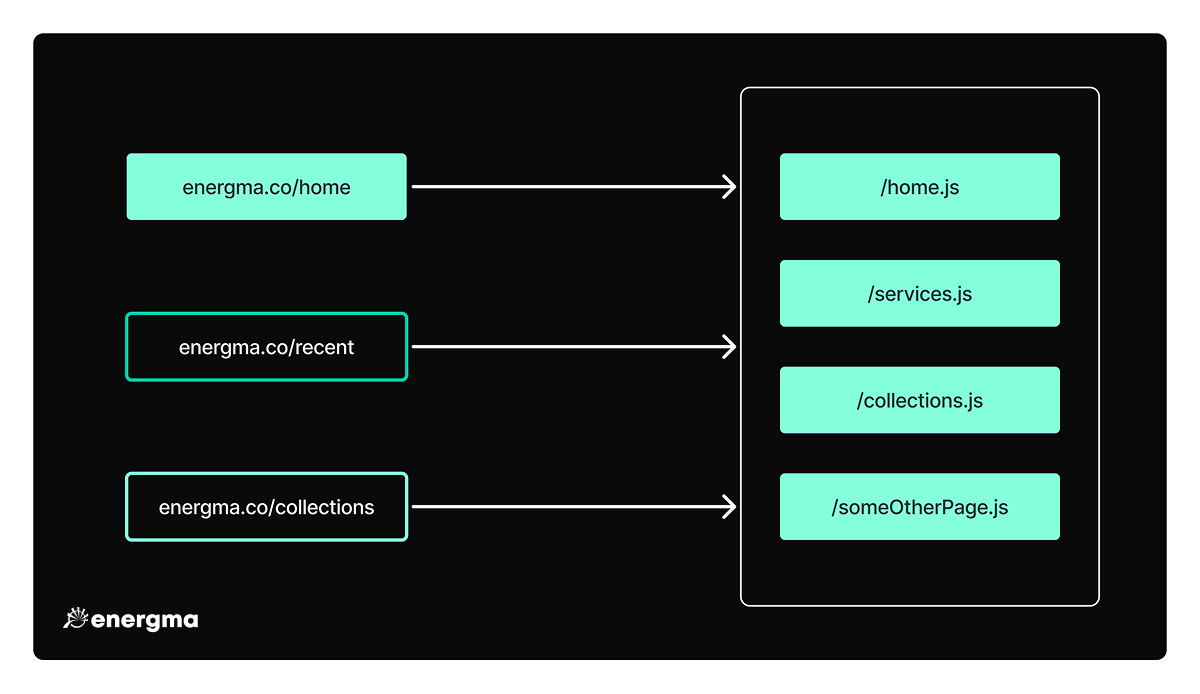

Code splitting is the process of slicing a large JavaScript bundle into smaller chunks, so that the browser only loads the parts of the codebase that the current page needs. For example, assume a user visits Energma and then our services. Without code splitting, the entire bundle.js is downloaded upfront, which can significantly degrade performance.

All code for all pages is served via a single file

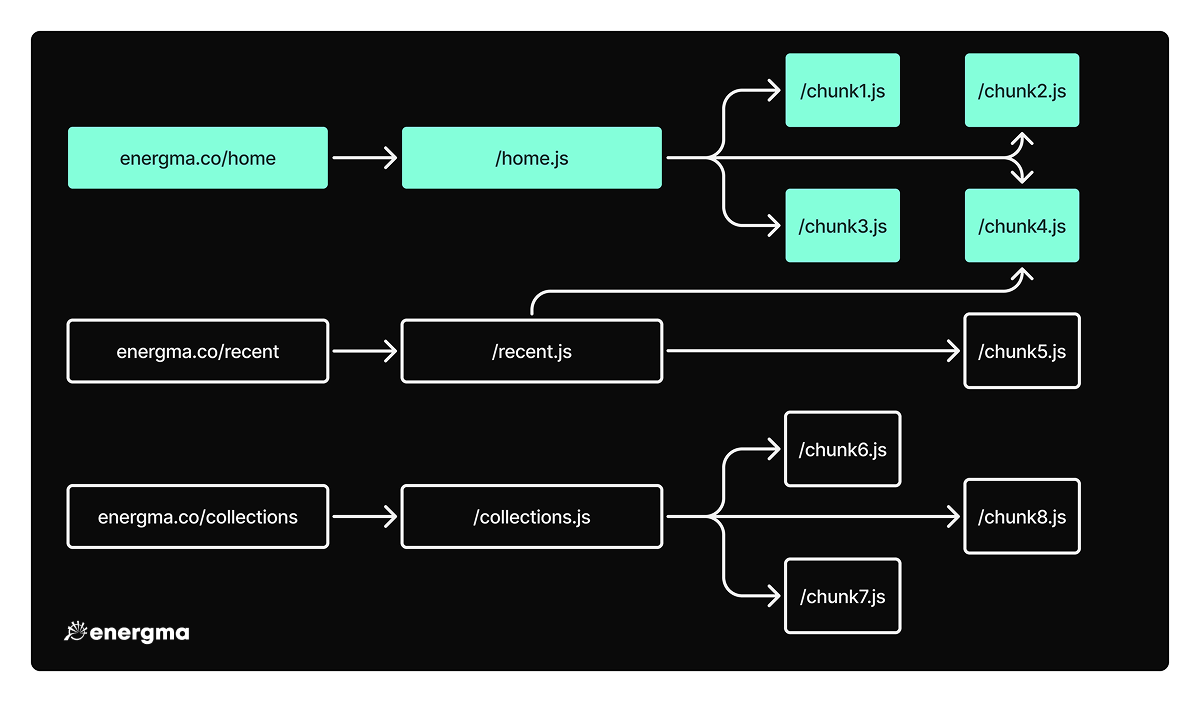

After code splitting, the browser downloads only what the page needs. This makes navigation to our homepage nearly instant, since the browser parses and executes only a fraction of the code. And the benefits compound:

- Critical scripts are loaded immediately, so content renders faster

- Non-essential scripts are loaded asynchronously, without blocking the user

- Shared code is cached by the browser, making subsequent page transitions fast

- Less JS is downloaded overall

The collective impact is drastically reduced load times, a fluid user experience, and a foundation built for speed at scale.

Only chunks needed for the page are downloaded
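The caching benefit can be sketched with a toy chunk planner. This is an illustration under stated assumptions, not how any particular bundler or browser implements it: the manifest `chunksByPage`, the page names, and the chunk names are all hypothetical.

```typescript
// Hypothetical chunk manifest: which chunks each page needs.
// The page and chunk names are illustrative, not taken from the real app.
const chunksByPage: Record<string, string[]> = {
  home: ["vendor", "home"],
  settings: ["vendor", "settings"],
};

// Chunks the browser has already downloaded and cached.
const cachedChunks = new Set<string>();

// Returns the chunks that must be downloaded to show `page`,
// skipping anything already cached by an earlier navigation.
function chunksToDownload(page: string): string[] {
  const needed = chunksByPage[page] ?? [];
  const missing = needed.filter((chunk) => !cachedChunks.has(chunk));
  missing.forEach((chunk) => cachedChunks.add(chunk));
  return missing;
}

// First navigation downloads the shared chunk plus the page chunk.
const firstVisit = chunksToDownload("home");
// The next navigation reuses the cached shared chunk.
const nextVisit = chunksToDownload("settings");
```

Here `firstVisit` is `["vendor", "home"]` and `nextVisit` is just `["settings"]`: the shared `vendor` chunk is paid for once, and neither page ever downloads the other page's code.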

Since our existing bundler didn't have any built-in code-splitting, engineers had to manually define packages. More specifically, our packaging map was a massive 6,000+ line dictionary that specified which modules were included in which package.

As you can imagine, this became incredibly complex to maintain over time. To avoid sub-optimal packaging, we enforced a rigorous set of tests, the packager tests, which engineers came to dread because they often required manually reshuffling modules with each change.

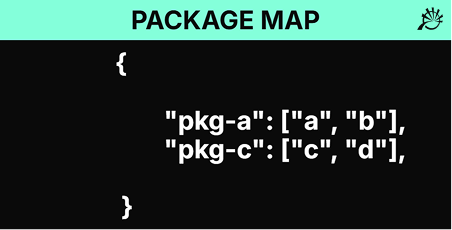

Manual packaging also meant that certain pages loaded far more code than they needed. For instance, assume a package map that defines two packages, pkg-a and pkg-b, which together contain modules a, b, c, and d.

If a page depends on modules a, b, and c, the browser would only need to make two HTTP calls (i.e. to fetch pkg-a and pkg-b) instead of three separate calls, one per module. While this would reduce the HTTP call overhead, it would often result in having to load unnecessary modules (in this case, module d). Not only were we loading unnecessary code due to a lack of tree shaking, but we were also loading entire modules that weren't necessary for a page, resulting in an overall slower user experience.
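The example above can be sketched in code. The exact contents of the package map are an assumption: the layout below (pkg-a holding a and b, pkg-b holding c and d) is one arrangement consistent with the description, and `resolvePackages` is a hypothetical helper, not a function from our packager.

```typescript
// Assumed package map: the exact contents are illustrative, chosen only to
// be consistent with the example in the text (packages pkg-a and pkg-b,
// modules a through d).
const packageMap: Record<string, string[]> = {
  "pkg-a": ["a", "b"],
  "pkg-b": ["c", "d"],
};

// Given the modules a page depends on, work out which packages must be
// fetched and which modules come along without being needed.
function resolvePackages(pageModules: string[]): {
  packages: string[];
  unneeded: string[];
} {
  const wanted = new Set(pageModules);
  const packages: string[] = [];
  const unneeded: string[] = [];
  for (const [pkg, modules] of Object.entries(packageMap)) {
    // A package is fetched whole if the page needs any module in it.
    if (modules.some((m) => wanted.has(m))) {
      packages.push(pkg);
      unneeded.push(...modules.filter((m) => !wanted.has(m)));
    }
  }
  return { packages, unneeded };
}

const pageResult = resolvePackages(["a", "b", "c"]);
```

With this map, `pageResult.packages` is `["pkg-a", "pkg-b"]` (two requests instead of three) and `pageResult.unneeded` is `["d"]`, the module that is downloaded even though the page never uses it.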

Issue #3: No Tree Shaking

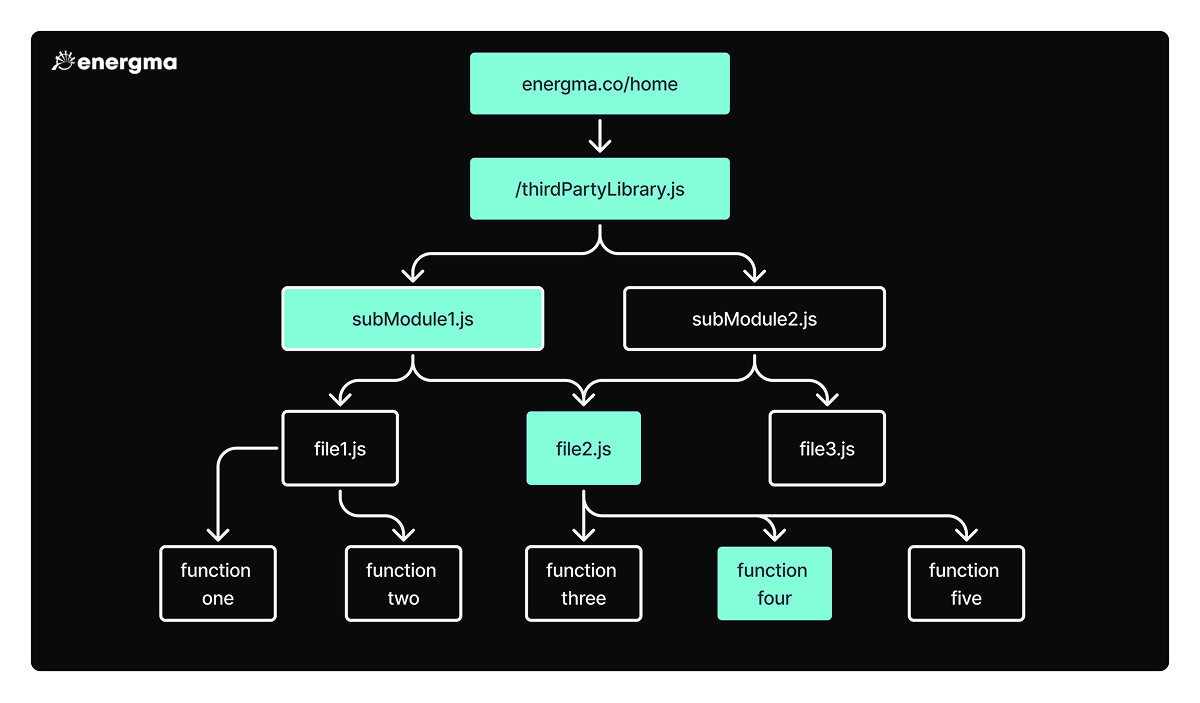

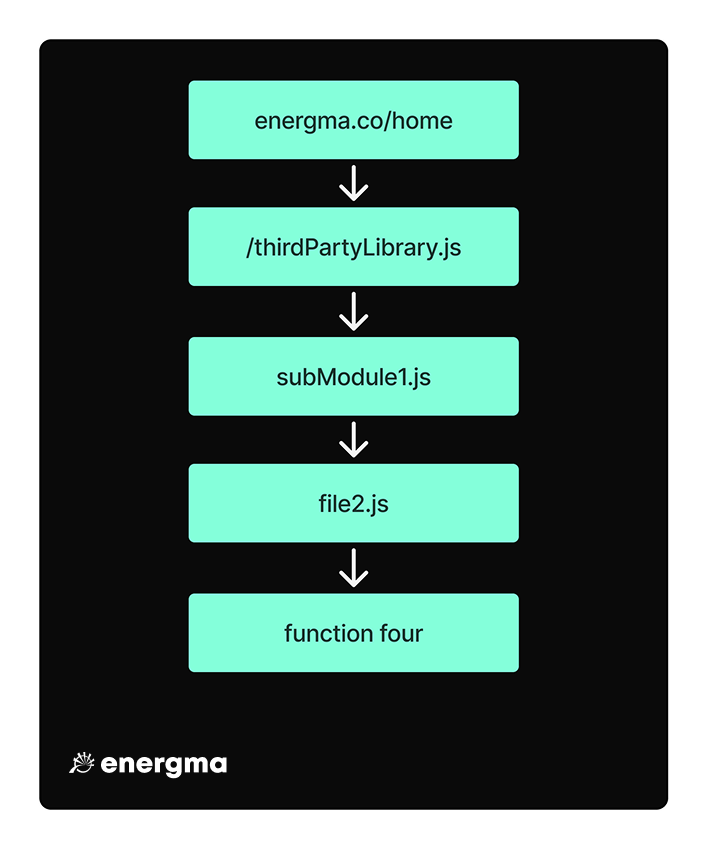

Tree shaking is a bundle-optimization technique to reduce bundle sizes by eliminating unused code. Let's assume your app imports a third-party library that contains several modules. Without tree shaking, much of the bundled code is unused.

All code is bundled, regardless of whether it's used

With tree shaking, the static structure of the code (its import and export statements) is analyzed, and any code that cannot be reached from what the app actually imports is removed. This results in a much leaner final bundle.

Only used code is bundled
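At its core, this analysis is a reachability check. The sketch below is a deliberately simplified model, not the algorithm of any specific bundler (real tools also have to reason about side effects, for example). The library graph `references` and its export names are hypothetical.

```typescript
// Hypothetical dependency graph of a small library: each export lists the
// other exports it references. Names are illustrative only.
const references: Record<string, string[]> = {
  formatDate: ["padZero"],
  padZero: [],
  parseDate: ["padZero"],
  deepClone: [],
};

// Keep everything reachable from what the app imports; drop the rest.
function shake(imported: string[]): { kept: string[]; removed: string[] } {
  const reachable = new Set<string>();
  const pending = [...imported];
  while (pending.length > 0) {
    const current = pending.pop()!;
    if (reachable.has(current)) continue;
    reachable.add(current);
    // Anything this export references must be kept as well.
    pending.push(...(references[current] ?? []));
  }
  const all = Object.keys(references);
  return {
    kept: all.filter((n) => reachable.has(n)),
    removed: all.filter((n) => !reachable.has(n)),
  };
}

// The app imports a single function from the library.
const shaken = shake(["formatDate"]);
```

In this model, `shaken.kept` is `["formatDate", "padZero"]` and `shaken.removed` is `["parseDate", "deepClone"]`: the helper that `formatDate` depends on survives, while exports nothing refers to are dropped from the bundle.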

Since our existing bundler was barebones, it had no tree shaking functionality either. The resulting packages would often contain large swaths of unused code, especially from third-party libraries, which translated to unnecessarily long wait times for page loads. Also, since we used Protobuf definitions for efficient data transfer from the front-end to the back-end, instrumenting certain observability metrics would often end up introducing several additional megabytes of unused code!